We often emphasize that application-layer developers should anticipate how large models will evolve and avoid building features that models will soon handle natively; otherwise, a model upgrade could render that work obsolete. So how will large models evolve? Below are six insights, from my perspective, on their future capabilities.

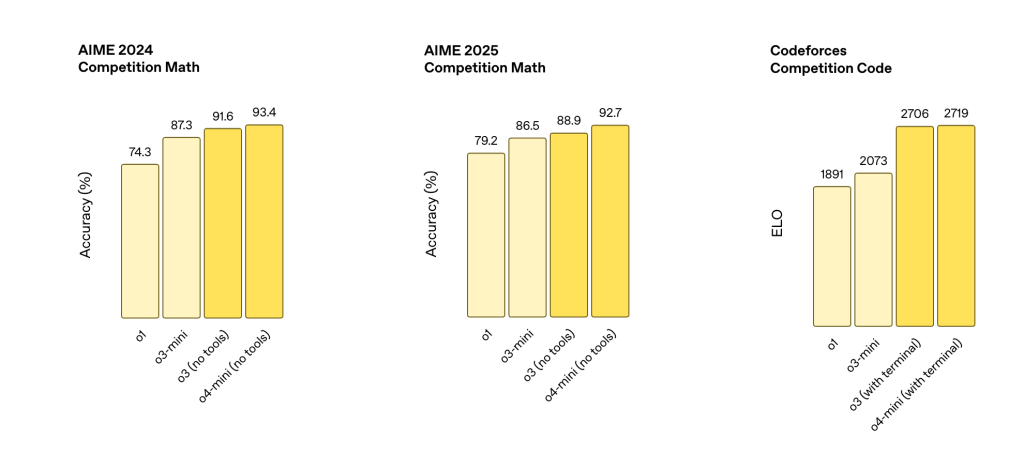

1. Model Intelligence Will Align More Closely with Real-World Production Needs as Evaluation Improves

Currently, the intelligence of large models is measured mainly by having them take various exams.

On the one hand, their scores are steadily improving. On the other hand, there is a growing need for evaluation frameworks better suited to production environments. Through reinforcement learning, models can also achieve stronger generalization. The “intelligence” demonstrated in exams reflects fragmented skills, whereas production environments require systematic problem-solving (e.g., a legal model may pass the bar exam but fail to grasp a client’s ambiguous request). Real-world scenarios demand continuous decision-making, human-machine collaboration, and long-term memory.

In the future, foundation models will continue to iterate along both directions: higher exam scores and stronger performance under production-oriented evaluation.
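The gap between exam-style and production-style evaluation can be illustrated with a minimal sketch. Everything here is hypothetical (the `Episode` type, both metric functions, and the sample data are illustrative assumptions, not an established benchmark): the first metric grades every step as an independent question, while the second counts a task only if all of its steps succeed.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Episode:
    """One multi-step task; each entry records whether that step was handled correctly."""
    step_results: List[bool]


def exam_score(episodes: List[Episode]) -> float:
    """Exam-style metric: every step is graded as an independent question."""
    steps = [ok for ep in episodes for ok in ep.step_results]
    return sum(steps) / len(steps)


def task_success_rate(episodes: List[Episode]) -> float:
    """Production-style metric: a task counts only if all of its steps succeed."""
    return sum(all(ep.step_results) for ep in episodes) / len(episodes)


# Made-up illustrative data: four tasks of four steps each.
episodes = [
    Episode([True, True, True, True]),
    Episode([True, True, False, True]),
    Episode([True, False, True, True]),
    Episode([True, True, True, False]),
]

print(exam_score(episodes))         # share of individual steps answered correctly
print(task_success_rate(episodes))  # share of tasks completed end to end
```

In this toy data the model gets most individual steps right, yet completes only one task in four, which is the kind of gap a production-oriented evaluation is meant to expose.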

2. Models Will Increasingly Converge Towards Unification

Language models, vision models, and action models will eventually evolve into a unified large model. Currently, these models are developed separately, but in the future, they will gradually merge into a single, cohesive system. This mirrors how humans handle text, images, and sound: although the brain has specialized regions such as the visual and auditory cortices, information processing is fundamentally multimodal and jointly represented.

From the perspective of AI development history, model unification aligns with the natural progression of technology. Early AI research treated different perceptual and cognitive functions separately, similar to the modular theories in early neuroscience. However, with the rise of deep learning—particularly the Transformer architecture—multimodal fusion became possible.

Historically, this evolution is evident:

- 1957: Early perceptrons could only handle simple, single-type pattern recognition.

- 1980s-1990s: Expert systems focused solely on domain-specific knowledge.

- Post-2010: Deep learning models achieved breakthroughs in speech (e.g., DeepSpeech), vision (e.g., AlexNet), and text (e.g., Word2Vec).

- 2017: The Transformer architecture laid the foundation for multimodal unification.

- 2023-2024: Multimodal large models like GPT-4, Claude 3, and Gemini achieved unified understanding of text and images.

Under the multimodal framework, input processing is already multimodal, but output remains relatively limited. In the future, models should generate mixed outputs—sometimes an image is worth a thousand words, and a video conveys even richer information. This trend is already visible in cutting-edge AI systems:

- GPT-4V: Processes both text and image inputs, understands visual content, and performs reasoning.

- Gemini: Google’s multimodal model handles text, images, audio, and video.

- DALL·E 3: Generates high-quality images from text descriptions, demonstrating deep language-vision fusion.

- Claude 3 Opus: Analyzes charts, interprets text within images, and tackles complex multimodal tasks.

Future models will not only process multimodal inputs but also generate multimodal outputs, dynamically choosing the most suitable format (text, image, audio, or video) for each task. Architectures will become more flexible, allocating attention across modalities as needed, achieving a true “perception-understanding-action” loop—akin to human cognition.

Recent breakthroughs like Veo 3 highlight this trend in multimodal output capabilities. Veo 3’s standout innovation is its native integration of audio generation via V2A (Video-to-Audio), enabling the model to “watch scenes and hear sounds” like humans, automatically adding music and sound effects to silent videos.

3. AI and Human Intelligence Overlap but Differ, and Will Ultimately Integrate

Current AI intelligence is akin to “memorizing thousands of documents”: it relies on vast training data and human annotations but struggles to generalize to unseen scenarios. Reinforcement learning may unlock capabilities beyond human intelligence, but ultimately, AI and human intelligence will coexist, with both overlaps and distinctions.

While AI is biologically inspired, its implementation, strengths, and mechanisms differ significantly. AI excels in computational speed, large-scale data processing, and precision, whereas biological intelligence (BI) outperforms in energy efficiency, adaptability, robustness, few-shot learning, and integrating emotions or consciousness.

Rather than competing, BI and AI will combine to form a more powerful intelligence. Future AI will adopt human-like learning mechanisms (e.g., few-shot learning, causal reasoning), while humans will enhance cognition via assistive technologies, creating “augmented intelligence.” Human-AI collaboration will redefine workflows: AI handles data-intensive tasks, while humans focus on creativity and value judgments, jointly driving societal progress.

4. Models, Data, and Actions Converge into a Trinity

As Dr. Qi Lu and others have emphasized, AI is evolving beyond pure reasoning—it now thinks, uses tools, and acts, forming a trinity of intelligence (models), experience (data), and action (tool usage).

This integrated intelligence is an inevitable stage in AI’s evolution. Historically, early AI systems were siloed: expert systems focused on knowledge representation, robotics on movement, and ML on learning from data. Modern AI breaks these boundaries, creating end-to-end perception-cognition-action systems. The progression looks like this:

- Early expert systems lacked autonomous learning/action.

- Robotics initially had limited cognition.

- ML systems passively learned without active application.

- Today’s agent technology embodies the trinity.

Notable examples:

- AutoGPT: Plans, executes, and learns from outcomes autonomously.

- AgentGPT: Uses tools, searches information, and completes complex tasks.

- Tesla FSD: Integrates real-time perception, decision-making, and control for full self-driving.

Future agents will:

- Operate more autonomously in complex tasks.

- Continuously optimize via closed-loop learning.

- Master dynamic tool usage and multi-agent collaboration.

This trinity transforms AI from passive tools into active partners.
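The think, use-tools, act loop described in this section can be sketched in a few lines. This is a minimal illustration under stated assumptions, not how AutoGPT or any real agent framework is implemented: `stub_model`, `TOOLS`, and `run_agent` are hypothetical names, and the "model" is a hard-coded stand-in for a real LLM call.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical tool registry: the "action" part of the trinity.
TOOLS: Dict[str, Callable[[str], str]] = {
    "calculator": lambda expr: str(sum(int(x) for x in expr.split("+"))),
    "echo": lambda text: text,
}

# One remembered step: (tool name, argument, observation).
Step = Tuple[str, str, str]


def stub_model(goal: str, memory: List[Step]) -> Tuple[str, str]:
    """Stand-in for a real model call (assumption): choose the next tool and argument.

    A real agent would prompt an LLM with the goal and the accumulated memory.
    """
    if not memory:
        return "calculator", goal      # first step: compute the sum in the goal
    return "finish", memory[-1][2]     # afterwards: report the last observation


def run_agent(goal: str, max_steps: int = 5) -> str:
    """Run the think -> act -> record loop until the model decides to finish."""
    memory: List[Step] = []            # the "experience" part of the trinity
    for _ in range(max_steps):
        tool, arg = stub_model(goal, memory)     # think (model)
        if tool == "finish":
            return arg
        observation = TOOLS[tool](arg)           # act (tool usage)
        memory.append((tool, arg, observation))  # record experience (data)
    return "gave up"


print(run_agent("2+3+4"))
```

Swapping `stub_model` for a real model call and extending `TOOLS` is where an actual agent would differ; the loop structure itself is the point of the sketch.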

5. AI as a Discovery Tool: Revolutionizing Scientific Research

AI is transitioning from a research aid to an active participant in scientific breakthroughs, potentially accelerating major discoveries.

Historically, science relied on human intuition and experimentation. AI is reshaping this paradigm:

- 1990s: Computer-aided drug design (limited to assistance).

- 2000s: ML analyzed astronomical data for new phenomena.

- 2015: AI began autonomously designing/executing experiments.

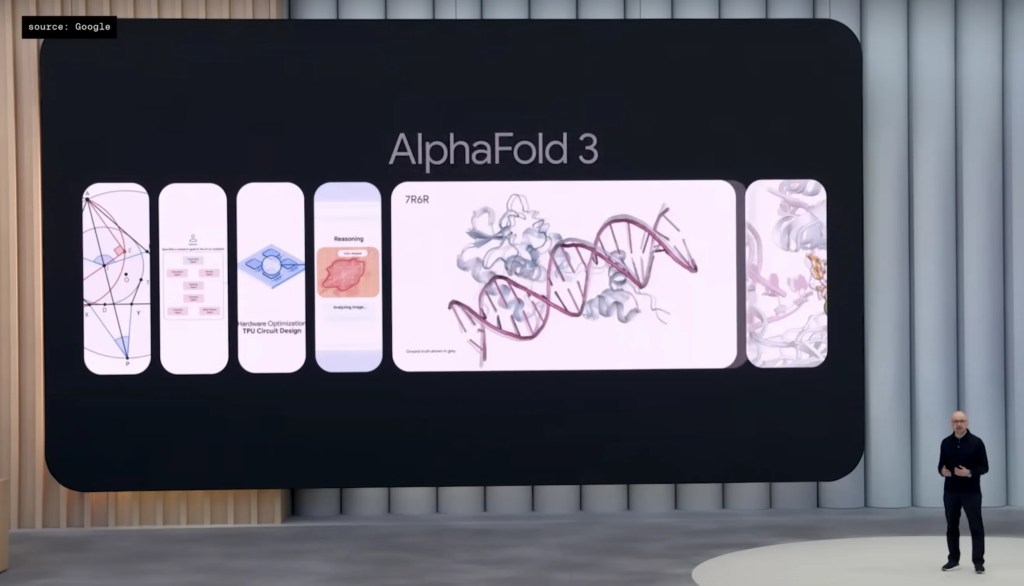

- 2020: AlphaFold solved protein folding, a 50-year challenge.

Key examples:

- AlphaFold: Predicts protein structures, revolutionizing biology and drug development.

- AI Drug Discovery: Insilico Medicine designed novel drug molecules in weeks.

- Materials Science: AI predicts new material properties (e.g., for energy).

- Astronomy: Machine-learning models applied to NASA’s Kepler data identified previously missed exoplanets (e.g., Kepler-90i).

Future AI will:

- Propose hypotheses and design validation experiments.

- Surface cross-disciplinary insights beyond human perception.

- Redefine scientist-AI collaboration, potentially creating new research paradigms.

(Recent Google I/O also highlighted progress here.)

6. Intelligence-Hardware Integration Expands Capabilities

As models advance, many hardware devices can be reimagined: early robot vacuums, last year’s viral pool-cleaning robots, the Plaud memo-recording card, and OpenAI’s rumored AI hardware with Jony Ive all point in this direction. The trend suggests substantial room for further innovation.
